Losing trust in "science"

New Pew Research polling data suggests that fewer Americans trust science or view it as a social positive.

This post is likely an exercise in stating the obvious, but it’s nonetheless worth seeing how polls such as these influence the discourse around so-called science and trust.

A recent Pew Research Center poll found, unsurprisingly, that Americans who responded reported declining trust in science.

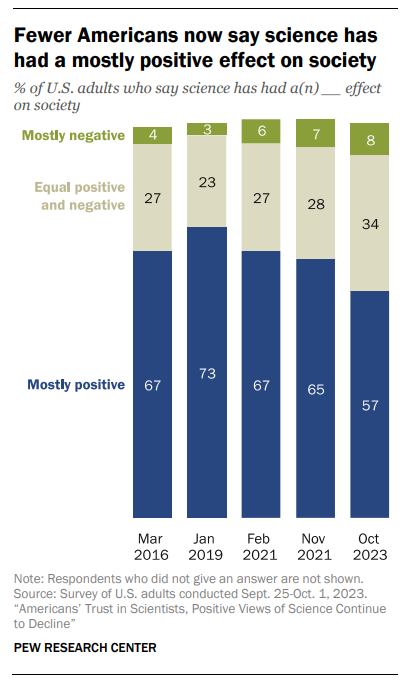

In particular, when respondents were asked to rate whether science has had a positive or negative effect on society, around 57% said that science has had a “mostly positive” effect, a decline of 8 percentage points from the November 2021 results.

The shift seems to have gone predominantly toward the ambiguous category. The decline is interesting, though not too surprising given everything that went on during COVID.

What’s rather surprising is that this decline seems similar among both Democratic and Republican respondents:

Although more Republicans than Democrats view science’s effect on society as ambiguous or “mostly negative,” it’s interesting that the trend has been declining even among Democrats, who are often described as the most ardent supporters of science.

This decline may be driven in part by Black and Hispanic Democratic respondents, who showed a far greater decline in trust than White respondents, whose trust declined only mildly over the past few years:

Asian Democratic respondents were not included because there were too few of them in the sample. The same reason is apparently given for the lack of data on minority Republican respondents.

Overall, Pew polling can’t explain why respondents showed a decline in trust towards science.

We can only surmise that many of the pronouncements of those in positions of authority did not sit well with a large portion of the American public. Given the lack of actual scientific rigor regarding masking or vaccines, it should come as no surprise that a subset of the population grew wary of many of the COVID guidelines. It also didn’t help when science became heavily politicized, so much so that many otherwise unbiased science journals took it upon themselves to urge readers to vote for Joe Biden as the more “scientific” presidential candidate.

This has led many critiques of Republicans to frame their views as a reflection of ignorance and conspiratorial thinking, as if Republicans are so dimwitted and unscientific that they would obviously vote for the most “unscientific” president imaginable. In reality, anyone with a semblance of rational thought would realize that demonizing a large subset of the population may in turn lead people to choose a contrarian president. It’s rather surprising how many people continue to assume that Trump voters were inherently conservative or Republican, when in fact many voters who were critical of the vaccines and lockdowns, but who otherwise lean progressive or Democratic, may have chosen Trump.

It’s not too hard to imagine that when the upper echelons of science publishing decide to tell people who to vote for during a presidential election, many within the public would eventually turn on these outlets for trying to sway public opinion. It may also cause people to question the overall veracity of these publications, whose own record has been questionable given some of the COVID-related papers they have allowed to be published.

This was even reflected in an online survey reported by The Conversation, of all places, which found that when people were shown information about science journals or articles that also included the journal’s stance on Trump or Biden, they rated these journals as less trustworthy (although the effect seems rather small):

And this is a general problem with interpretations of these types of polls. They become an easy exercise in misunderstanding and in extrapolating things that aren’t really there. More consequentially, they may become an exercise in social engineering under false pretenses, or a vehicle for inserting political ideology.

Consider this article from CNN in response to the Pew Research polling, which starts with this:

A physician friend recently shared something shocking. Her patient had been putting off a much-needed colonoscopy, despite a strong family history of colon cancer. The reason? The patient had heard online a false rumor that doctors were surreptitiously administering Covid-19 vaccines while patients were sedated — and she didn’t want to get the jab. No amount of discussion about why this would not happen could convince her.

We are seeing and hearing these types of stories across America. The rate of childhood vaccinations against dangerous diseases like diphtheria and tetanus have fallen in recent years — and non-medical exemptions to kindergarten routine vaccinations are rising. Climate change skepticism is growing. Reproductive health misinformation has been named the next “infodemic.”

It’s obvious that the premise here is one of conspiracy. We’re told very little about what discussion took place with this patient or why she came to this conclusion (setting aside anecdotes of people being vaccinated without their consent), so we can’t tell what sort of conversation actually happened. We only know that this patient was allegedly unmoved in her opinion.

The problem I have with this sort of premise is that it reeks of appeals to authority. I can’t help but imagine this doctor starting her sentences with “trust me, I’m a doctor!” as if that would assuage a patient’s real concern over being unknowingly vaccinated. In fact, I would argue that the current climate has made more people wary of such remarks from medical professionals who weaponize their position to justify a “trust me, bro” attitude towards other people’s health.

And it’s this sort of perspective that leads these outlets and doctors to the erroneous conclusion that misinformation needs to be clamped down on, rather than providing clear information and educating the public.

The CNN article also points towards using different groups to better communicate information to the public, but again this leaves us to wonder how well these measures actually provide credible information. One serious flaw in “fact-checkers” and others who target misinformation is that they are inherently reactionary. That is, rather than educating people on why or how certain ideas are incorrect, they mostly target technicalities or issue pronouncements of “that’s not true” without providing much clarity. They look for claims that seem incorrect and declare them false, but do little to provide persuasive or robust evidence in rebuttal. Again, it often becomes a battle of appeals to authority, or really a battle of white coat wearers (something I have grown more critical of).

As an example of this social engineering, consider the last few paragraphs of the CNN article, which describe how scientists can supposedly earn the public’s trust:

Social media can play a part, too. Work by the National Academy of Medicine, in collaboration with the Council for Medical Specialty Societies and the World Health Organization (with which Dr. Ranney was involved), outlined ways for social media companies to identify and amplify “credible health messengers” — both the professionals and the everyday folks who are volunteering their time to create content. We applaud companies like YouTube that have made this work a priority, and hope that more companies will follow.

By combining facts with stories, we can share tangible examples of how science and public health protect us, thereby increasing trust. It’s not just vaccines and therapies, but also clean water, clean air, anti-lock brakes, smoke-free zones, over-the-counter pregnancy tests and MRI machines. Each of these discoveries, policies and technologies helps keep us healthy and safe. And many of us have personal narratives of how and why we’ve been helped.

At the end of the day, if the United States is going to improve our trust in science, we have to ensure that we are all public health communicators. Sharing data with the public and building trust with communities is an essential part of science. It’s time for our training and our actions to reflect that.

Who’s to say which health messages or messengers are credible (obviously a rhetorical question)? Who will verify that the information being disseminated is credible? The problem is that many of these remarks are the same remarks that have led people to become less trusting of science.

It seems like an approach focused more on telling the public what is true and what to think than anything else. It’s certainly not going to be unbiased; rather, it will likely elevate certain groups as arbiters of misinformation while singling out others (mostly “uneducated” and minority groups) as susceptible to it.

Keep in mind that California now requires children to take courses in media literacy under a newly passed bill:

I personally don’t have an issue with media literacy, because misinformation can come from anywhere. That being said, I certainly don’t believe that California schools are going to be unbiased in their approach. I find it highly unlikely that Fox and NBC News will both be treated as sources of misinformation. It’s another way to insert heavy biases while trying to seem scientific.

As an aside, I have begun watching several videos from How to Cook That. I don’t necessarily agree with all of the premises, as I believe certain videos could use more reproducibility and context. Regardless, the channel is at least a good illustration of why media literacy matters, especially for young kids.

For instance, her videos debunking 5-Minute Crafts and other content farm channels are probably worth showing to young kids, as she points out details that reveal how manufactured and fake many of those videos are. One example is a video about putting butter on shoes to remove their creases, in which a different-size pair of shoes comes out of the freezer than the pair that went in. Another is a drip cake hack using a hair dryer, in which the melting cake is magically no longer melted in the next frame.

Probably the most obvious are the newfangled popcorn tricks, such as mixing Skittles in with unpopped kernels and expecting a rainbow treat rather than a burned, blackened, unpopped mess:

Most adults may recognize these videos as obviously fake hacks, but bear in mind that many of them are aimed at kids, and unfortunately many kids may try these hacks without knowing how much editing goes into faking the end results. Some of the hacks are outright dangerous, such as making colorless strawberries by soaking them in bleach for hours, or cooking eggs in the microwave, which has seriously harmed both children and adults.

Social media is inundated with all sorts of misinformation and dangerous tips, tricks, or advice that can be harmful to kids, and yet these same social media platforms have not done anything to curb these forms of harmful information, and instead find it necessary to clamp down on alleged conspiracies related to vaccines and supplements, which may be mostly targeted at adults. I guess adults need more protecting compared to children.

Judging by the examples provided in the USA Today article, the bill also seems to take a political spin rather than a broader approach towards media literacy:

With so many young people online − more than 90% − legislators said social media will continue to reach and influence them.

A huge part of the problem, the bill’s text argues, is that many young people can’t tell the difference between ads and news stories. Citing a study out of Stanford University, Berman said in the bill’s text that 82% of middle schoolers couldn’t tell the difference between ads and news stories.

On his website, Berman also cited a 2019 Stanford University study in which more than half of the high school students surveyed thought a “grainy” video that claimed to show ballot stuffing was “strong evidence” of voter fraud in the U.S.

The video was filmed in Russia, Berman said.

And with misinformation on the rise, the bill will seemingly give legislators a way to combat online misinformation and its interference with democratic decision-making and public health.

Berman said in the bill’s text that before this bill, there were no lessons put in place to help students become more information-literate. With this bill signed into law, students from kindergarten through 12th grade will have the opportunity to brush up on their media literacy skills, the bill says.

Again, note that there are many areas where media literacy would be vital for kids, especially when it comes to content put out by content farms. But rather than target this kind of misinformation, legislators find it more prudent to target fake videos of ballot stuffing, as if these were the most pertinent issues for high schoolers. Wouldn’t this approach be better served by critiquing social media trends?

I would suggest readers check out the full polling data. Overall, though, it appears that an alleged problem is brewing regarding a loss of “trust” in science, with no clear attempt to understand why trust may be declining. It’s apparent that the answers being offered won’t do much to combat the problems these people are presenting, and are more likely to steer younger generations toward a way of thinking that lacks critical thought.

If you enjoyed this post and other works please consider supporting me through a paid Substack subscription or through my Ko-fi. Any bit helps, and it encourages independent creators and journalists such as myself to provide work outside of the mainstream narrative.
