"Confidence in Science and Misinformation"

Elsevier, in partnership with Economist Impact, surveyed researchers around the world to ask about their views on science and misinformation. The results, of course, are rather obvious and telling.

Recently, Elsevier, one of the world’s largest scientific publishers, released a global survey conducted in partnership with various science organizations and an organization called Economist Impact.

I’d never heard of Economist Impact, but if you go to their website you’ll immediately find this:

Economist Impact combines the rigour of a think-tank with the creativity of a media brand to engage an influential global audience.

We partner with corporations, foundations, NGOs and governments across big themes including sustainability, health and the changing shape of globalisation to catalyse change and enable progress.

As well as this video:

People are free to comment on globalization and influence here, but, similar to Oster, I just wanted to see who the heck is leading these surveys (I will provide some context below as to why I find this joint venture concerning).

The survey asked thousands of researchers[1] from different disciplines and in various positions (i.e. teaching, research, etc.) to answer a select number of questions about scientific research, including whether their perspectives had changed because of the pandemic.

The survey itself is rather broad, and even the summary is quite long, so I’m going to highlight a few key points here.

Although the survey covers a few research topics, you’ll notice that the focus generally revolves around so-called “misinformation”.

Of note, here are Elsevier’s remarks on the survey:

The fact that the pandemic had such a significant impact on the research community is not a surprise, but the extent and nature of that impact on the prevalence of misinformation, the fundamental role that researchers play in society, and on inequalities is noteworthy.

Perhaps most notable of all is the imbalance between awareness and understanding that has emerged through the pandemic. Public attention on research has increased, bringing greater recognition and appreciation of research, but public understanding of how research is conducted has not risen in parallel. The call to equip researchers with the skills they need to communicate research with more clarity and confidence has come through in this study loud and clear.

Interesting.

And the “Key Findings” are summarized below for those wanting a quick rundown:

It’s a pretty timely survey given what’s going on at Twitter and the sudden resurgence of arguments over cracking down on so-called “misinformation”, although the argument isn’t confined to Twitter and seems to have popped up again elsewhere (maybe in time for today’s midterms?).

That aside, the survey appears broad and ambiguous.

For instance, one survey question asked respondents how important it is to separate good-quality research from misinformation.

What exactly is “good quality research” here, and what exactly is “misinformation”?

The survey provides this bit of information for some context (emphasis theirs):

Over two thirds (69%) of researchers surveyed say that the pandemic increased the importance of separating good quality research from misinformation. According to the researchers we spoke to, these concerns stem from a combination of the misuse, misinterpretation and miscommunication of research and, in particular, the research process. While some saw the pressure to publish preprints as adding to these concerns, others argued that peer-reviewed journals themselves were not immune to publishing poor quality research.

Almost four-in-five respondents (78%) believe that the pandemic increased the importance of science bodies and researchers in explaining research findings to the public, suggesting that researchers see it as part of their role to bring clarity and counter misinformation in the public sphere.

There’s bound to be a ton of bias here, since what counts as “misinformation” is highly subjective, and the label is likely to be applied disproportionately to anything that strays from the scientific consensus or is critical of the narrative portrayed by the scientific community.

Paradoxically, the comments above suggest that there are concerns over misinterpretation and miscommunication of the research being conducted.

I actually find this a rather apt remark, in that both sides may suffer from biases that lead them to seek out the answers they desire rather than look at the information that is out there and weigh each piece both individually and collectively. There’s also the fact that many people would rather be told what to think than how to think, which extends to how scientific research is conducted and the methodology required to reach the end results.[2]

That said, it’s clear that the notion of misinformation here isn’t really about everyone, or about fostering a better understanding of scientific endeavors in a broader sense, but rather about the select few who, once again, decided to deviate from the COVID narrative.

When asked what role scientists play in society, researchers were more inclined than in pre-pandemic years to say that their role is to combat false information (note the portion emphasized below):

The pandemic may also be prompting changes in how respondents see their primary roles in society. When we asked survey respondents about what they see as the important aspects or functions of their role in society—before the pandemic compared with today—we see that respondents still feel that their role mainly involves “educating others” within their field and “enabling innovation”. However, since the pandemic—when fake news and conspiracy theories came to the fore, affecting vaccine adoption and hindering efforts to save lives—our research suggests an increase in the number of researchers who want to play a bigger role in countering misinformation and, in turn, engaging in more public communication (see Figure 4).

It’s the remarks here about “fake news and conspiracy theories” that really encapsulate what this whole survey is trying to portray.

No surprise there, as for all intents and purposes this is a survey conducted more in the vein of things many of us have become accustomed to seeing.

It’s made more worrisome by the fact that Elsevier, one of the leading scientific publishers, decided to partner with a supposed think-tank in order to convey this message about needing to clamp down on misinformation (more on that later).

Now, back to the role of scientists in society, or what I would consider a more appropriate measure of the hubris of scientists: one survey question asked researchers how they see their role now compared with pre-pandemic years.

Note here that the legend appears to have been mislabeled[3]:

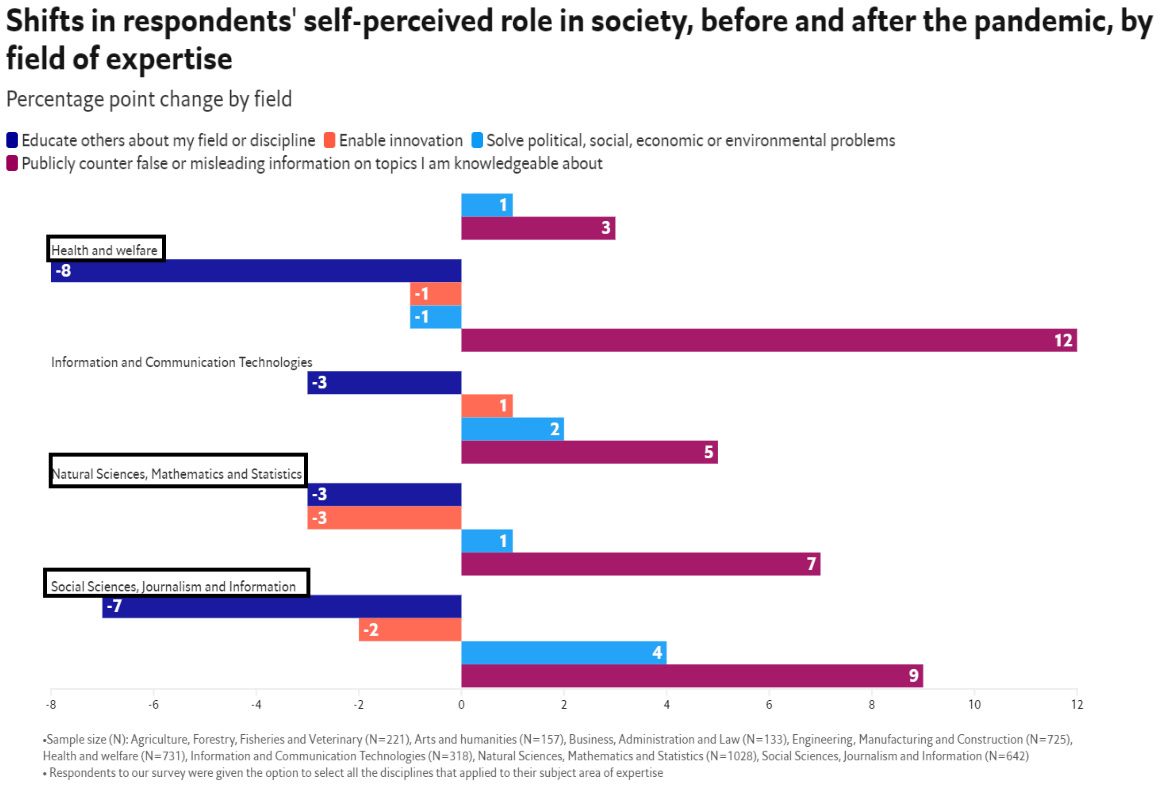

Now, pair this with the next figure, which stratifies these self-perceived roles by discipline, and the results are rather telling:

Note that the numbers here reference changes compared to previous years (i.e. negative means a decline in perception for that role now compared to pre-pandemic years).

What’s striking is the paradoxical finding that, for those in the medical field and in the social sciences/journalism, there was a noticeably large decline in “educating the public” alongside a large increase in “combating misinformation”.

I find these results rather hypocritical given the prior survey results, in that researchers are arguing that they should increase their communication of scientific findings to the public while also suggesting that educating the public on their discipline is a less important role than fighting misinformation (at least relative to previous years).

More importantly, one can argue that the need to fight misinformation reflects a reactive approach to science rather than a proactive one. As the old saying goes, knowledge is power, so why would researchers take it upon themselves to fight misinformation rather than provide information in an unbiased manner that can help educate the public? Why not educate people rather than worry about there being misinformation to react to?

This is made even more paradoxical by an excerpt suggesting that so-called “misinformation” may actually cause researchers to be more careful about providing caveats and limitations to their research, something I would argue is often missed or understated in the reporting of studies.

However, I do find the remarks about selecting research topics more in line with the public interest a bit concerning, given that there’s a large divide between what the public wants and what the public actually knows about a given topic. Think of Theranos, the vaccines, and environmental research, which bolstered public support through false marketing and manipulated data in order to provide the incentive to push for further policies and research in these areas, almost like a scientific Ouroboros.[4]

Key Takeaways

Probably one of the most telling survey questions, and one that helps explain this situation, is the following, in which researchers were asked what the main determinants are for policymakers to consider research relevant.

Of course, the top two determinants (when broken down by region) are whether the policymaker has a personal connection to a researcher and what the researcher’s institutional affiliation is:

It’s almost as if they’re suggesting that a somewhat incestuous relationship between scientists and policymakers is well known among researchers. That the science adopted into policy is perceived to be determined mostly by how close the ties are between scientists and policymakers (or, most likely, their investment portfolios), rather than by the actual quality of the research (which is the lowest-ranked determinant in at least North America and Europe), is an egregious failing of science.

To the extent that policies are determined by how much politicians can get out of them, this is not only a gross bastardization of science but also a complete misuse of it for nefarious ends. It also explains why people such as Fauci held such weight with regard to COVID: where the NIH may once have been a mere source of suggestions, it now became a government body of tyranny and enforcement.[5]

And this appears to be the general intent behind this joint venture and the creation of this survey. Rather than gauge the perceptions of scientists and create open discourse, this survey appears to have been done more in the vein of validating the censorious efforts of both scientists and politicians to clamp down on perceived “misinformation”.

Its purpose appears to be more about providing reasons why misinformation is so dangerous, and why global measures may need to be taken to address it.

The fact that Elsevier, one of the biggest scientific publishers around, joined with Economist Impact to pursue this survey doesn’t bode well for the survey having been done in good faith, or for it being about the actual pursuit of science rather than portraying the dangers of dissident voices.

If you enjoyed this post and other works please consider supporting me through a paid Substack subscription or through my Ko-fi. Any bit helps, and it encourages independent creators and journalists outside the mainstream.

[1] The term “researcher” here is rather broad, and it includes professors, those conducting research, those who are still in school, and so on. So keep that in mind when considering the makeup of this group.

[2] One survey question asked researchers to rank the top 3 things that make them confident that the research they are citing is reliable. One answer suggested that methodology was a pretty big factor. It’s rather curious that the researchers weren’t asked whether a study’s results would make them more likely to cite it. The fact that “peer-reviewed” ranks so high is also concerning, especially given that accessibility of the data used in a study was rated so low.

[3] The comment following this figure (Figure 4) states that the share of scientists who see their role as fighting misleading information went up to 23% from 16% previously, which implies that the legend has been mislabeled. Quite ironic that a figure on misinformation itself contains some misinformation.

[4] Keep in mind that many researchers included in the survey were actually concerned about how well the public understands science, as noted in the following excerpt:

It is also clear that among the researchers we surveyed, there is some frustration about public understanding of science and research. When looking at the ways in which this attention has manifested, far fewer respondents agree that the pandemic has led to a better public understanding of the research and peer-review process (38% agree). Based on the outcomes of our roundtables and interviews, however, this is not just about how the public understands science; it also stems from the difficulties that researchers face around communicating the uncertainty associated with the practice of research.

Ultimately, both researchers and the public want to have confidence in the research being shared. Confidence in research therefore depends on a stronger foundation between the research community and the public. A common theme that emerged from researchers in the roundtables that we observed was that this required a two-way dialogue and a framework for ethically communicating research, not simply more press releases from university departments.

So there are more disjointed arguments being made here. Maybe scientists all have different opinions that can’t be captured by a survey alone, or maybe many scientists and those in high places don’t know how to properly interact with the public and have that necessary engagement.

[5] That is not to say that the NIH had never been a body of tyranny, but rather that, in light of COVID, it became quite apparent how much more power the NIH had been given than before.
