Does anyone bother reading studies?! (rant)

Another Ivermectin study means more pontification rather than proper analysis.

There is much to be said about how information, in particular within the sciences, gets disseminated to the public. The open-access approach to COVID research has given the public a rare glimpse into the research process and how clinical trials are designed. At no other time can I remember so much information being freely accessible to the public, and I was extremely encouraged, believing that this would mean far more people would be interested in understanding science and research methodologies.

However, much to my chagrin, that doesn’t appear to be the case. In fact, it seems even more obvious that many people are not only reporting on topics that they are uneducated in, but are also making bold assumptions and pontificating when there is no evidence to support such ideas.

There is no better example of this than Ivermectin. If a study supports its effectiveness, its proponents may stay silent about methodological flaws. If a study finds against Ivermectin, its detractors will overlook the same flaws that may be seen in positive studies.

What’s even more worrisome is to see that, at a time when trust in news and media outlets is at an all-time low, people still turn to news outlets to report on the results of such studies. How can it be that those who are critical of the news will turn to the news to get their reporting on a study? Do you know if they are reporting accurately and without bias? Or is this a quick way to get views and push out a narrative?

This effect is more widely known as the Gell-Mann Amnesia Effect, in which someone who is an expert on a topic will clearly notice the faults in reporting on said topic. However, when it comes to topics that one is not educated in, people may take the word of the news outlets completely. When we know the facts, we know they’re lying; when we are ignorant of the facts, we are trusting of their reporting.

At any point in time people will be trusting of a news media they know full well should not be trusted. If you can’t trust them to report accurately on what’s going on in Ukraine/Russia, can you trust them to report on the results of an Ivermectin study accurately?

And why is it that, at a time when all of this information is freely accessible to everyone, people do not turn to the actual article and read it for themselves? Instead, I am finding more people pontificating on studies based on reports from people who should not be trusted and may not have read the study properly, in order to provide a narrative-driven, biased opinion on a topic they are likely to have no knowledge of.

So let’s get into it.

A recent study came out suggesting that early treatment with Ivermectin was ineffective. Of course, news outlets and various Substacks were quick to pounce on this study as validation of Ivermectin’s ineffectiveness, sparking another online debate. Again, I must ask all of you- how likely do you think it is that any of these people read the damn study?!

In case anyone would like to read it for themselves, and I absolutely encourage everyone to do so, the study was conducted by Reis et al. and was part of the TOGETHER trial. For those unaware, the TOGETHER trial was a group of studies looking at repurposed drugs for COVID including Ivermectin, Lopinavir/Ritonavir, and Fluvoxamine, so this study is nothing new- this is only the full report of a study whose results were likely released at various interim stages.

Here are some aspects of the study:

Like all other TOGETHER trials, this study was conducted in clinical settings in Brazil.

The study was conducted between March 2021 and August 2021.

Inclusion criteria: adults over the age of 18 who tested positive within 7 days of symptom onset and who had at least one high-risk factor (over 50, heart disease, obesity, etc.)

The Ivermectin group was provided 400 μg/kg of body weight per day for 3 days; the placebo group was provided sugar pills for 1, 3, 10, or 14 days. Patients were told to take their medications on an empty stomach.

Originally, researchers intended to provide only 1 day of Ivermectin, but changed to 3 days in response to feedback from Ivermectin advocates.

Participants were shown a welcome video with information on the trial, information on Ivermectin, adverse events, and follow-up.

Outcome data were collected either in person, by phone, or through WhatsApp.

Right off the bat there are some points of concern with this trial procedure:

Timing matters: It’s far more obvious now that the earlier the treatment the better. Some may argue that 7 days is far too long a window to consider as early treatment. The results of these studies need to be taken with the caveat that inclusion was up to 7 days after symptom onset.

Variant Matters: Again, more transmissible and virulent variants require different approaches to treating the virus. This became obvious with the results of the Molnupiravir trial, which showed that Delta greatly altered the effectiveness of the drug. Studies that do not take into account the variant that is being treated are overlooking a critical aspect of providing a therapeutic agent. The treatment regimen must match the disease/variant.

Heterogeneity: This study was considered a randomized, double-blind study and yet the treatment regimen was different between the two groups. Why was the Ivermectin group only provided one set dosage while the placebo group was offered 4? Clearly there is a risk of unblinding patients to their treatment group based on this aspect alone. How did the researchers keep this from happening? Note also that the results of the 77 participants who were provided only 1 day of Ivermectin were not included.

Introducing Bias and Influence: If the participants were shown an informative video on Ivermectin, the researchers need to indicate what was included in that video. Were participants told that Ivermectin is an anti-parasitic? Were they told that research is ongoing with Ivermectin? Did the video make any mention of the effectiveness/ineffectiveness of Ivermectin? Obviously this would alter participants’ perception of Ivermectin. We know full well that the placebo/nocebo effect is real and yet the researchers may have unwittingly introduced bias into the study.

Inconsistent Data Collection: Three different approaches were taken to collecting data outcomes. Although phone calls and WhatsApp may make it easier to contact participants, it’s clear that this approach will affect the outcomes that are being reported.

Hospitalization and Hospitalization Proxy: One of the biggest factors overlooked in this study was how hospitalization was defined. Hospitalization sounds straightforward; it would indicate that a patient required hospital care, oxygen, and other invasive interventions. However, hospitalization here was used as a very broad term, and in fact included observation for more than 6 hours, not even an overnight hospital stay, which means the definition of hospitalization appears far too broad to provide anything of relevance:

The primary composite outcome was hospitalization due to Covid-19 within 28 days after randomization or an emergency department visit due to clinical worsening of Covid-19 (defined as the participant remaining under observation for >6 hours) within 28 days after randomization. Because many patients who would ordinarily have been hospitalized were prevented from admission because of limited hospital capacity during peak waves of the Covid-19 pandemic, the composite outcome was developed to measure both hospitalization and a proxy for hospitalization, observation in a Covid-19 emergency setting for more than 6 hours.

Edit: I forgot to mention that Ivermectin is a fat-soluble molecule and it is suggested that people take Ivermectin with food. An empty stomach is likely to affect the bioavailability of Ivermectin, and that factor can greatly affect the results.

There’s some more I can say but I’ll instead encourage people to read the study and see any other faults. Overall, there are plenty of things to be critical about with this study, but there will always be things to be critical about in any study.

What I’m finding more concerning is that no one appears to be interested in at least examining any of the studies and understanding the scope of any study. Instead, people seem more interested in rushing to push out information regardless of its factual basis.

Even more concerning is that this is happening with greater intensity on Substack. Many people who question the mainstream media are either citing news outlets or are not even reading the studies they are citing. It’s becoming quite disheartening to see that the same tactics we malign legacy media for may be finding their way onto Substack in an attempt to garner greater engagement or viewership here.

The need for greater factual information is at an all-time high, and yet in some aspects people are still blindly trustful of whatever they see. Just because a news source is alternative or independent does not exempt it from the same criticisms we level at establishment media.

So here are a few points worth considering:

Take nothing at face value: Never use trust as a validation to not be skeptical. View all news with a discerning eye and never take someone’s word as being the definitive truth, and this obviously goes for me as well!

Do your own research: What’s the difference between a homemaker who has never gone to college and a recent PhD graduate? 8-12 years of formal education. That’s it. With all of the negative aspects of COVID, one of the greatest endeavors has been the collaboration of many fields of science in an attempt to collect and disseminate information regarding SARS-CoV-2. This gave many laypeople the ability to look through the window into science and research. Unfortunately, even with open access to so many journals, many people are still viewing science from the outsider’s perspective rather than getting knee-deep into studies. There’s nothing more empowering than becoming informed and knowing that you are fully capable of doing your own research. You’ll obviously falter, but lean into those times when that happens and continue to seek out information. The best time to learn was yesterday; the second best time is today.

Statistical Significance vs Clinical Significance: Most clinical studies use statistical measures to find significance. This type of significance tells you that the effects or results seen are unlikely to be due to chance alone and can plausibly be attributed to the therapeutic or intervention. But here’s a big issue: with a large enough sample, statistical significance can be found for even the smallest effect, while the real-world significance may be nothing. Let’s say a longitudinal study wanted to see if saying “hi” to strangers 5 times a day would increase longevity. The research lasted for 10 years and found that saying “hi” increased life expectancy by an average of 10 minutes, and these results were found to be statistically significant. Statistical significance here would tell us that saying “hi” to people is likely to increase how long we live, but does it really matter if the effect is only an additional 10 minutes in the greater scheme of things? This is the big difference between statistical significance and clinical significance. Where statistical significance asks whether an effect can be distinguished from chance, clinical significance asks a more qualitative question: whether the effects seen are of clinical value. Certainly prolonging someone’s life by 10 minutes serves no clinical value. The reverse can also hold: even though a drug may not show statistically significant effects, for some patients it may still prove therapeutic, and when these drugs have low toxicity does the statistical significance really matter? This is the argument that many clinicians who prescribe Hydroxychloroquine and Ivermectin subscribe to; whether the drug is statistically effective may be meaningless to them if they know that the patients they are overseeing are showing improvement. It’s a battle between the two kinds of significance that has ravaged the COVID discourse so greatly.
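To make the “hi” example concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the 10-year spread in lifespans, the half-year threshold for what counts as clinically meaningful, the group sizes (deliberately absurd), and the use of a simple two-sample z-test. None of it comes from the Ivermectin study. It only shows that the same 10-minute effect can fail or pass a significance test depending on sample size, while its practical value never changes.

```python
import math

# All numbers below are hypothetical and chosen only to mirror the "hi" example.
MINUTES_PER_YEAR = 60 * 24 * 365.25
EFFECT_YEARS = 10 / MINUTES_PER_YEAR   # a 10-minute gain in lifespan
SD_YEARS = 10.0                        # assumed spread of lifespans in each group
MIN_MEANINGFUL_YEARS = 0.5             # assumed smallest gain worth caring about
ALPHA = 0.05                           # conventional significance threshold


def two_sided_p_value(mean_difference, sd, n_per_group):
    """Two-sample z-test p-value, assuming equal SDs and equal group sizes."""
    standard_error = sd * math.sqrt(2.0 / n_per_group)
    z = mean_difference / standard_error
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2.0))


def assess(n_per_group):
    """Return (p_value, statistically_significant, clinically_meaningful)."""
    p = two_sided_p_value(EFFECT_YEARS, SD_YEARS, n_per_group)
    return p, p < ALPHA, EFFECT_YEARS >= MIN_MEANINGFUL_YEARS


if __name__ == "__main__":
    # Same effect, two very different (and unrealistic) sample sizes.
    for n in (10**6, 10**13):
        p, stat_sig, clin_sig = assess(n)
        print(f"n per group = {n:.0e}: p = {p:.3g}, "
              f"statistically significant = {stat_sig}, "
              f"clinically meaningful = {clin_sig}")
```

Under these assumptions the effect is not statistically significant with a million people per group, becomes significant with an impossibly large sample, and is not clinically meaningful in either case. The p-value moves with sample size; the 10 minutes do not.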

Results are always relative: Instead of saying that Ivermectin is ineffective, what would you make of the results if it stated that “Ivermectin, when given at varied symptom onset timings against Variant X at dosage A among patients with various comorbidities, when compared to a placebo group with varied dosage, was measured based upon the incidences of hospitalization as defined as clinical observations longer than 6 hours, had outcomes measured based on in-person meeting, phone calls, or tele-conference meetings, suggest that the effects of Ivermectin were minimal according to statistical measures relative to the placebo group and the variables used to measure said outcomes and control group.” Okay, that’s not quite a charitable interpretation but always keep in mind that results are never, ever absolute! Results of studies should always be interpreted within the greater scheme, and done with proper comparisons and relativity. Heterogeneity plagues a lot of clinical research and is a huge thorn in meta-analyses. The results of one study are dependent upon the variables, limitations, and relativity of clinical procedures. The same happens when comparing one study to another. Never trust someone who speaks so absolutely about the results of a study without providing the caveat of relativity.

Apologies if this rant seems unhinged, but I know so many can do better and that many readers should expect better. We learn nothing when we don’t put in the effort to find the facts and investigate the information, or even bother to criticize those who may not be acting in the best interest of readers.

Always stay skeptical and view everything you see with a discerning eye. And more importantly become an informed individual and do your own research.

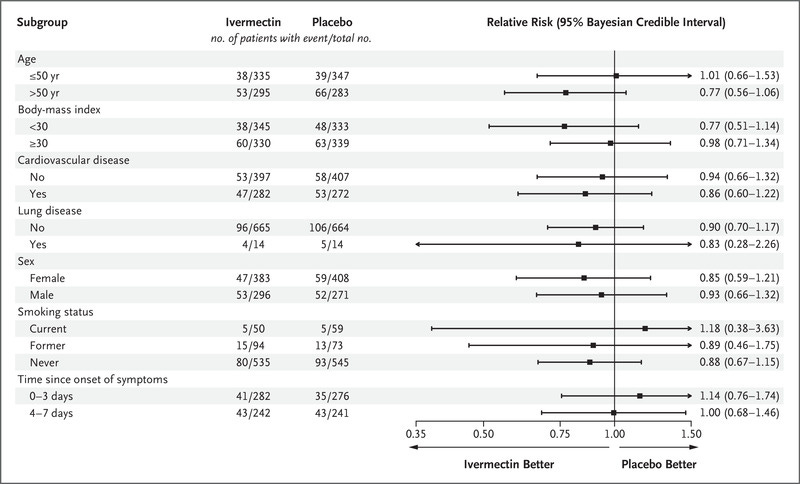

Edit: Fortunately the researchers here provided some stratification of their results. When looking at timing they found that treatment within 0-3 days of symptoms with Ivermectin did not show any benefit. Again, although there was stratification you must keep in mind that variables will also affect outcomes even if people are treated early. Keep everything within context.

As a physician with MPH and MS degrees in evidence-based medicine, medical informatics, and biostatistics, I totally agree with your critique of other "expert" analyses on Substack. As you suggested, I downloaded and read the study. This RCT was a poor quality study, IMHO, with 12 different treatment centers for the 679 patients who received IVM and with no analysis of secondary outcomes like adverse events or death. I am happy to see the breadth of your analytic critique. Your critique is very much on-point.

The value of RCTs is overrated since they are generally restricted to early outcomes and do not evaluate late events like adverse events, long-COVID type events, and events associated with vaccination, which was not even addressed in this RCT analysis. It is truly sad that so many people do not read these studies or lack the expertise to critique them. Medicine is a very complex science that is often over-simplified in critiques I have read from others.

Thank you for your thoughtful input.

I also read a Substack today where the author has previously expressed bias against IVM and clearly regurgitated something he had read regarding this particular study. I’m not very good at data and statistics and I know people can easily use these to mislead people. In this particular study, the method seemed quite flawed for the reasons you stated. Also, as a provider, I don’t prescribe IVM without also making sure the patient is on zinc and vitamins C and D3. Also, if they have comorbid conditions, their prescribed dose is higher, and generally IVM is prescribed for 5-7 days, not three. IVM is also supposed to be taken with food (perhaps you mentioned this). It’s good to read and try to discern truth. I’m so skeptical about everything!